Can a robot become a movie director?

An international team of researchers is developing a fully autonomous cinematography system for aerial drones, with the aim of letting the onboard artificial intelligence take over film directing entirely.

Ever since the introduction of aerial drones, filmmakers have been using them for the bird’s-eye angles that previously required expensive helicopter shots.

However, new technology is now putting the power of a film director in the hands of the onboard artificial intelligence of an aerial drone – complete with a fully autonomous system for cinematography that learns humans’ visual preferences.

The new technology is currently being developed by a team of researchers from the Department of Engineering at Aarhus University, Carnegie Mellon University in Pennsylvania, the Technical University of Munich, and Nanyang Technological University, Singapore.

“The drone is learning how to film in a way that pleases our visual preferences for cinematic art. That means that the drone has to autonomously understand which viewpoints will make for the most artistic and interesting scene, while simultaneously taking the entire context of the scene into account, for example to avoid obstacles and be aware of actors,” says Associate Professor Erdal Kayacan, who is leading the Danish part of the project.

Deep learning from user votes

The ‘most interesting scene’ is often quite subjective, and many film directors will probably quibble over which particular bird’s-eye scene is more interesting than another. Because of this, the autonomous drone uses deep reinforcement learning, trained here on human input from simulation studies.

In the studies, people score simulated scenes based on the on-screen camera angles and actor positions. These scores are used to teach the artificial intelligence a generalizable behaviour for shooting the most interesting actor movements and scenes.
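As a rough illustration of how such votes could become a training signal, the Python sketch below averages viewer scores per combination of camera angle and actor position and picks the highest-rated angle. It covers only this aggregation step, not the deep reinforcement learning itself, and the names `reward_table` and `best_angle`, the angle labels, and the scores are all hypothetical rather than taken from the project.

```python
from collections import defaultdict
from statistics import mean


def reward_table(votes):
    """Average the human scores for each (camera_angle, actor_position) pair."""
    scores = defaultdict(list)
    for angle, actor_pos, score in votes:
        scores[(angle, actor_pos)].append(score)
    return {key: mean(values) for key, values in scores.items()}


def best_angle(table, actor_pos):
    """Return the camera angle with the highest average score for this actor position."""
    candidates = {
        angle: reward for (angle, pos), reward in table.items() if pos == actor_pos
    }
    return max(candidates, key=candidates.get)


# Hypothetical votes: (camera angle, actor position, score from 1 to 5).
votes = [
    ("back", "open_field", 2),
    ("back", "open_field", 3),
    ("left", "open_field", 4),
    ("left", "open_field", 5),
    ("front", "open_field", 4),
]

table = reward_table(votes)
print(best_angle(table, "open_field"))
```

In a learning system, averages like these would act as the reward that the drone's policy tries to maximise, rather than being looked up directly.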

“Today, commercial autonomous drone products usually follow the clear, safe path behind the actor, allowing only a continuous back shot. That’s not very interesting, which is why we’re teaching the drone to constantly find the optimal perspective for the best audience experience,” says Erdal Kayacan.

Smart detection and planning

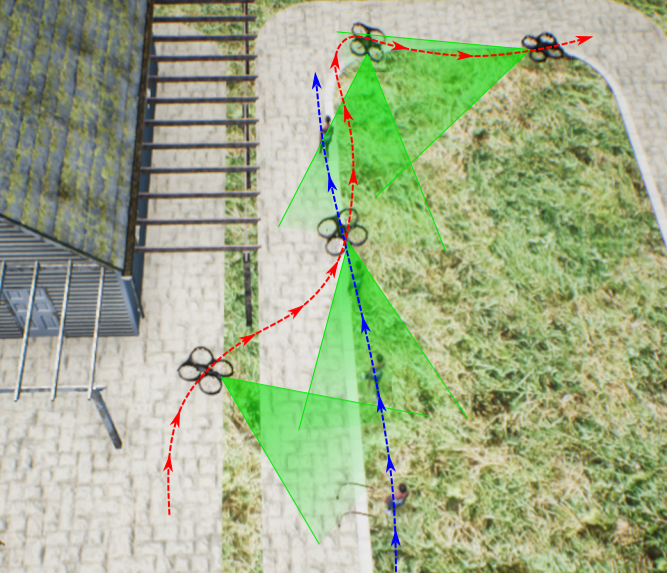

At the same time, the drone automatically detects occlusions and quantifies them in order to maintain a clear view of the actor. It can also anticipate the actor’s movement and plan its own trajectory ahead while continuously mapping the environment using LiDAR.
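One simple way to put a number on an occlusion is to sample points along the line of sight between camera and actor and count how many fall inside mapped obstacles. The Python sketch below does this on a small 2D occupancy grid. It is an illustrative assumption, not the project's method: the function name `occlusion_fraction`, the grid layout, and the sampling scheme are all hypothetical.

```python
def occlusion_fraction(grid, camera, actor, samples=50):
    """Fraction of sample points on the camera-to-actor segment inside occupied cells.

    grid[row][col] is 1 for an obstacle and 0 for free space; positions are
    (x, y) in cell units, so cell (col, row) covers [col, col+1) x [row, row+1).
    """
    (x0, y0), (x1, y1) = camera, actor
    blocked = 0
    # Skip the two endpoints: only the space between camera and actor matters.
    for i in range(1, samples):
        t = i / samples
        x = x0 + t * (x1 - x0)
        y = y0 + t * (y1 - y0)
        if grid[int(y)][int(x)]:
            blocked += 1
    return blocked / (samples - 1)


# Hypothetical 5x5 map with a single obstacle in the centre cell.
free_map = [[0] * 5 for _ in range(5)]
wall_map = [row[:] for row in free_map]
wall_map[2][2] = 1

camera, actor = (0.5, 2.5), (4.5, 2.5)
print(occlusion_fraction(free_map, camera, actor))
print(occlusion_fraction(wall_map, camera, actor))
```

A planner could then prefer viewpoints where this fraction is low, trading it off against how interesting the shot is.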

Furthermore, the technology requires no scripted scenes, GPS tags, or maps of the environment to navigate during shooting.

The autonomous drone filming system is not intended for filmmaking alone, and Associate Professor Erdal Kayacan imagines many different scenarios where the technology can be used:

“Traffic monitoring, crowd monitoring, construction sites, security, or police applications. Drones are used for a wide array of applications today, but they require a lot of attention. Our system could be taught to make the shots optimal for many applications other than film directing,” he says.

The project, which is also a best paper candidate, will be presented at the 2019 International Conference on Intelligent Robots and Systems (IROS), taking place in China from November 4 to 8.

Contact

Mail: erdal@eng.au.dk

Phone: +45 93521062